Ethical Concerns in Artificial Intelligence Development

Artificial Intelligence (AI) has rapidly transformed various industries, from healthcare to finance to transportation. With the ability to process large amounts of data and make decisions autonomously, AI has the potential to revolutionize the way we live and work. However, as AI technology advances, so do the ethical concerns surrounding its development and implementation.

Privacy and Data Security

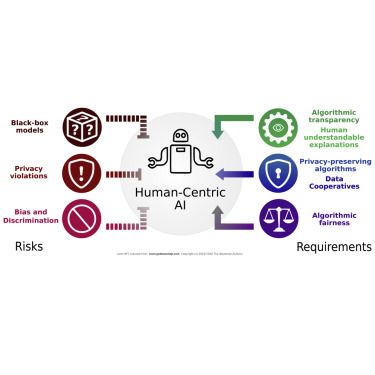

One of the primary ethical concerns in AI development is privacy and data security. Many AI systems depend on vast amounts of data to operate effectively, which raises questions about who can access that data and how it is used. For example, facial recognition technology has come under scrutiny for its potential to infringe on individuals’ privacy rights by tracking their movements without their consent.

To address these concerns, companies and developers must prioritize transparency and data protection in their AI systems. By implementing robust data security measures and obtaining user consent before collecting personal data, AI developers can help ensure that their technology respects individuals’ privacy rights.
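One way to make consent before collection concrete is to gate every write on a recorded consent grant. The sketch below is a minimal illustration under stated assumptions, not a compliance mechanism: `ConsentRegistry`, `collect`, and the purpose string `"face_recognition"` are hypothetical names, and a real system would persist grants and keep a history of revocations.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class ConsentRegistry:
    """Hypothetical in-memory record of which purposes each user agreed to."""

    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        # Consent is recorded per purpose, not as a blanket permission.
        self.grants.setdefault(user_id, set()).add(purpose)

    def revoke(self, user_id: str, purpose: str) -> None:
        # Revoking a purpose that was never granted is a no-op.
        self.grants.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(user_id, set())


def collect(record: dict, purpose: str,
            registry: ConsentRegistry, store: List[dict]) -> bool:
    """Append the record to the store only if its user consented to this purpose."""
    if not registry.allows(record["user_id"], purpose):
        return False  # nothing is stored without consent
    store.append(record)
    return True


# Usage: the same record is rejected before consent and accepted after it.
registry = ConsentRegistry()
store: List[dict] = []
sample = {"user_id": "u1", "image_ref": "frame-001"}  # illustrative data only

rejected = collect(sample, "face_recognition", registry, store)
registry.grant("u1", "face_recognition")
accepted = collect(sample, "face_recognition", registry, store)
```

Keying consent by purpose rather than by user alone means data gathered for one use cannot silently be reused for another.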

Algorithmic Bias

Another ethical concern in AI development is the issue of algorithmic bias. AI systems are often trained on large datasets that may contain biases, such as gender or racial bias. As a result, AI systems may inadvertently perpetuate these biases in their decision-making processes, leading to discriminatory outcomes.

To mitigate algorithmic bias, AI developers must carefully curate their training datasets and regularly audit their algorithms for bias. Involving diverse perspectives in the design and testing phases of AI development, and checking outcomes across the groups a system affects, helps developers build technology that is fair and equitable for all users.
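A basic audit can start by comparing how often a system produces a favorable outcome for each group. The following sketch computes per-group selection rates and the ratio between the lowest and highest rate. The function names and sample data are made up for illustration, and the 0.8 threshold follows the "four-fifths" rule of thumb, used here as an assumption rather than a definitive test of fairness.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def selection_rates(decisions: Sequence[int],
                    groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Return the share of favorable decisions (1 = favorable) for each group."""
    if len(decisions) != len(groups):
        raise ValueError("decisions and groups must have the same length")
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        favorable[group] += int(decision)
    return {group: favorable[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[Hashable, float]) -> float:
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    if not rates:
        raise ValueError("no groups to compare")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Illustrative data: group "a" is approved 3 times out of 4, group "b" once.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
ratio = disparity_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; choose one suited to the domain
```

A low ratio does not prove discrimination on its own, but it flags where a closer look at the training data and model is warranted.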

Autonomous Decision-Making

The autonomous nature of AI systems raises ethical concerns related to decision-making and accountability. AI systems act on fixed rules or learned models rather than case-by-case human judgment, yet their decisions can have significant consequences for individuals and society as a whole. When an AI system makes an error or a harmful decision, it can be difficult to determine who should be held accountable: the developer, the organization that deployed the system, or the person who relied on its output.

To address this issue, developers must design AI systems with built-in mechanisms for transparency and accountability. By providing users with insight into how AI systems make decisions and creating channels for redress in case of errors or harmful outcomes, developers can ensure that their technology upholds ethical principles in decision-making.
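As a rough illustration of such mechanisms, the sketch below stores every automated decision together with the factors that drove it and lets the affected person flag it for human review. All names (`DecisionRecord`, `DecisionLog`, the factor weights) are hypothetical, and the factor weights are assumed to come from whatever explanation method the system already uses.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class DecisionRecord:
    """One automated decision, kept with enough context to explain it later."""

    subject_id: str
    outcome: str
    model_version: str
    top_factors: Dict[str, float]  # factor name -> signed contribution
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    appeal_requested: bool = False


class DecisionLog:
    """Hypothetical append-only log supporting explanation and redress."""

    def __init__(self) -> None:
        self._records: List[DecisionRecord] = []

    def record(self, rec: DecisionRecord) -> int:
        """Store a decision and return its id (its position in the log)."""
        self._records.append(rec)
        return len(self._records) - 1

    def explain(self, record_id: int) -> str:
        """Describe the outcome, listing factors by strength of influence."""
        rec = self._records[record_id]
        ranked = sorted(rec.top_factors.items(),
                        key=lambda item: abs(item[1]), reverse=True)
        factors = ", ".join(f"{name} ({weight:+.2f})" for name, weight in ranked)
        return (f"Outcome '{rec.outcome}' from model {rec.model_version}; "
                f"main factors: {factors}")

    def request_appeal(self, record_id: int) -> None:
        """Flag a decision for human review."""
        self._records[record_id].appeal_requested = True

    def pending_appeals(self) -> List[DecisionRecord]:
        return [rec for rec in self._records if rec.appeal_requested]

    def export(self) -> str:
        """Serialize the full log as JSON for an external audit."""
        return json.dumps([asdict(rec) for rec in self._records])
```

Recording the model version alongside each outcome lets a reviewer trace a disputed decision back to the exact system that produced it.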

Conclusion

As AI technology continues to advance, it is crucial for developers and companies to address the ethical concerns surrounding its development and implementation. By prioritizing privacy and data security, mitigating algorithmic bias, and ensuring transparency and accountability in decision-making, AI developers can create technology that benefits society while upholding ethical values.

Ethical considerations must therefore stay at the forefront of AI development so that the technology is used responsibly. Addressing these concerns proactively lets us harness the transformative power of AI while safeguarding individuals’ rights and promoting equity and fairness in society.